Practical Projects - In the beginning (Genesis)

Important concepts to be aware of as we start some projects.

Hey! What's up, everyone? I know it has been a little over 3 weeks since the last post; I will make a better effort to be more consistent. In my first blog I talked about wanting to do practical projects for the workplace, projects that could also be used by everyday people looking to take their skills to the next level.

Along the way, there will likely be some terminology you are not familiar with (especially if you are new to the Information Tech/Data world), so I will try to work those terms into the blog so you don't get completely lost. It's also important to build your vocabulary in the field, especially if you're trying to establish yourself as a data-confident person.

OK, let's get started... For this blog and the projects going forward, there are some basic things you will need to know to successfully navigate what we go over. I won't go into too much detail, but I have included some links. I plan to gradually increase the difficulty of the projects so you can build your confidence.

Chapter 1. In the beginning... data was created, data from above and data from below. For almost any data project, there are some basic processes and steps that take place:

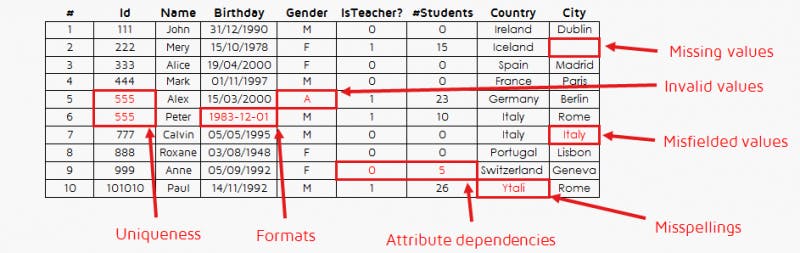

1) Data Cleaning- This is exactly what it sounds like. In many cases the raw data is not in the form it needs to be in for us to analyze it or load it into a platform such as a database or analytics platform. In this stage you identify missing data; data that was not entered consistently (for example, a mix of uppercase and lowercase); dates in different formats (or not in the format you would prefer); and data with extra spaces (this one will often make you pull your hair out, because it is so easy to miss). The list goes on.
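
To make these cleaning steps concrete, here is a minimal sketch using only Python's standard library. The records, field names ("material", "inspected"), and the two accepted date formats are all hypothetical, chosen just to show trimming spaces, normalizing case, standardizing dates, and flagging missing values.

```python
from datetime import datetime

# Hypothetical raw records: stray spaces, mixed case, mixed date formats, a missing date
raw_rows = [
    {"material": " Carbon  Steel ", "inspected": "2023-01-15"},
    {"material": "carbon steel", "inspected": "01/20/2023"},
    {"material": "STAINLESS", "inspected": ""},
]

# Date formats we assume this dataset uses
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")

def parse_date(text):
    """Return a date for the first matching format, or None if missing/unparseable."""
    text = text.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None

def clean_row(row):
    return {
        # collapse runs of whitespace, trim the ends, and lowercase
        "material": " ".join(row["material"].split()).lower(),
        "inspected": parse_date(row["inspected"]),
    }

cleaned = [clean_row(r) for r in raw_rows]
missing = [i for i, r in enumerate(cleaned) if r["inspected"] is None]

for row in cleaned:
    print(row)
print("Rows with missing dates:", missing)
```

After cleaning, the first two rows agree on "carbon steel" and both dates are in one format, while the third row is flagged so you can decide how to handle the missing date.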

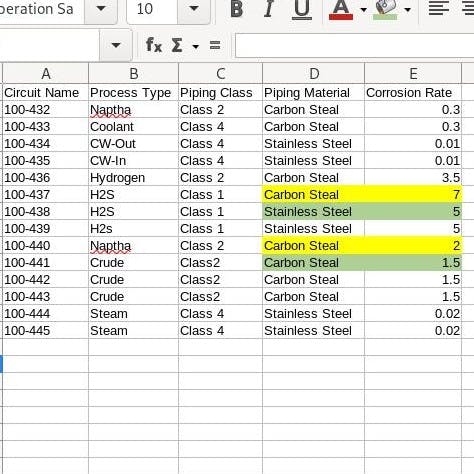

(Figure-1-Data Clean Example; source: quantdare.com/data-cleansing-and-transforma..)

2) Data Prepping/Transforming- This process often overlaps with data cleaning, but in many cases it is a separate step. Here we are separating (you may need to parse data), categorizing (assigning specific nominal classes), grouping (grouping your data so you can visually confirm everything is in the correct format/spelling), assigning (assigning rates or numerical values associated with classifications), redefining (applying updated names in place of old ones, or preferred names for pilot projects), cross-referencing, and standardizing. Figure-2 gives an example of prepping. Here we want to ensure that piping of the same class has the same corrosion rate for a given substance. However, if the piping material is different, that can either increase or decrease the rate depending on the material's ability to resist corrosion. In this example, classification happens on two levels: first by piping class and substance, then by piping material.

(Figure-2-Data Prep Example)
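
The two-level assignment described above can be sketched as two lookup tables: a base rate keyed by piping class and substance, then a multiplier for the piping material. The class codes, substances, and every number below are hypothetical placeholders, not real corrosion data, and using a multiplier for the material adjustment is an assumption made for illustration.

```python
# Hypothetical lookup tables; all values are placeholders, not real corrosion data.
# Level 1: base corrosion rate by (piping class, substance)
BASE_RATE = {
    ("A1", "water"): 0.10,
    ("A1", "crude oil"): 0.25,
    ("B2", "water"): 0.15,
}

# Level 2: multiplier reflecting how well each material resists corrosion
MATERIAL_FACTOR = {
    "carbon steel": 1.0,
    "stainless steel": 0.2,
}

def assign_rate(pipe_class, substance, material):
    """Base rate for the class/substance pair, adjusted for the pipe material."""
    base = BASE_RATE[(pipe_class, substance)]
    return round(base * MATERIAL_FACTOR[material], 3)

pipes = [
    {"id": "P-001", "class": "A1", "substance": "water", "material": "carbon steel"},
    {"id": "P-002", "class": "A1", "substance": "water", "material": "stainless steel"},
    {"id": "P-003", "class": "A1", "substance": "crude oil", "material": "carbon steel"},
]

for pipe in pipes:
    pipe["corrosion_rate"] = assign_rate(pipe["class"], pipe["substance"], pipe["material"])
    print(pipe["id"], pipe["corrosion_rate"])
```

Keeping the rates in lookup tables (rather than typing them row by row) is what guarantees that every pipe of the same class, substance, and material ends up with the same rate. A misspelled class or material raises a KeyError, which is a useful signal that the data still needs cleaning.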

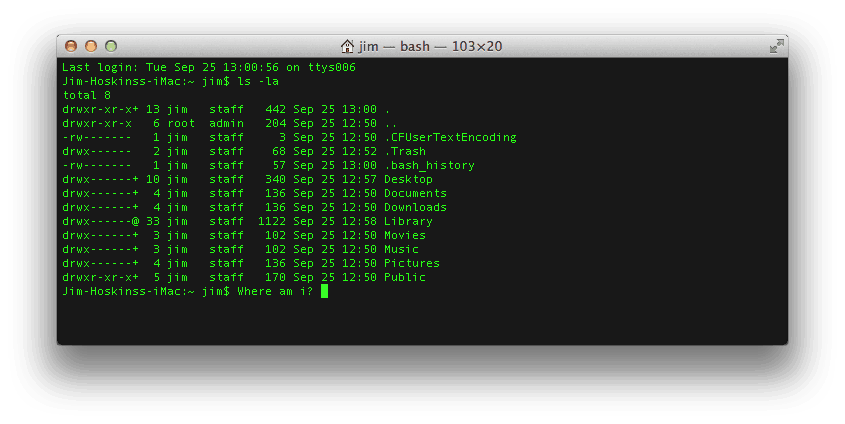

3) Script/s- A script is a set of commands written in a structure or format that automates steps and/or processes. Scripts are most often used to run operating system commands through a shell. Scripting is a form of programming, but scripts are usually not compiled ahead of time: an interpreter or shell reads and runs them line by line, and many of the commands they call are programs that have already been compiled and integrated into the platform. Scripts can be set to run automatically when a computer boots, or used to create actions that reduce the number of steps someone would otherwise do by hand. Scripting can refer to a short program written for PowerShell, DOS, Linux Bash, or R, and scripts can be run from a command line interface (CLI).
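
As a small example of the kind of repetitive step a script can automate, here is a sketch of a Python script that reports how many data rows are in every CSV file in a folder. The function name summarize_folder and the assumption that each file has one header row are illustrative choices, not a standard tool.

```python
import csv
import sys
from pathlib import Path

def summarize_folder(folder):
    """Return {file name: number of data rows} for every .csv file in folder."""
    counts = {}
    for path in sorted(Path(folder).glob("*.csv")):
        with path.open(newline="") as f:
            reader = csv.reader(f)
            next(reader, None)  # skip the header row (assumed to be present)
            counts[path.name] = sum(1 for _ in reader)
    return counts

if __name__ == "__main__":
    # Folder comes from the command line, defaulting to the current directory
    folder = sys.argv[1] if len(sys.argv) > 1 else "."
    for name, rows in summarize_folder(folder).items():
        print(f"{name}: {rows} rows")
```

Saved under a hypothetical name such as count_rows.py, it could be run from a command line with python count_rows.py path/to/folder, replacing the manual step of opening each file to check it.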

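4) Command line- A command line is a text-based interface, usually opened in a terminal window, where you type commands for the operating system to carry out. It is also where programs such as Python and "R" can be executed. In its simplest form, the kernel is the core of the operating system and sits between the programs you run and the hardware; the shell interprets what you type and starts the programs that do the work, which in turn rely on the kernel to reach the hardware. There are different types of command lines (for example, Windows Command Prompt, PowerShell, and Bash). Just know this is where we will, from time to time, run scripts or programs. See Figure-3 below.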
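
To show that a command typed at a command line is just a program being started, here is a minimal sketch using Python's standard subprocess module. It runs the current Python interpreter (sys.executable) with a one-line program, which is equivalent to typing python -c "print(2 + 2)" in a terminal; the one-liner itself is only an example.

```python
import subprocess
import sys

# Equivalent to typing this at a command line:
#   python -c "print(2 + 2)"
# sys.executable is the path of the Python interpreter running this code.
result = subprocess.run(
    [sys.executable, "-c", "print(2 + 2)"],
    capture_output=True,  # collect what the program prints instead of showing it
    text=True,            # decode the output as text rather than bytes
    check=True,           # raise an error if the program exits with a failure code
)
print("exit code:", result.returncode)
print("output:", result.stdout.strip())
```

An exit code of 0 conventionally means the program succeeded, which is the same signal a shell uses when you chain commands together.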

5) Importing- The process of loading data, a module, or a script into a platform or program so it can be processed or viewed. In most cases, if you are importing your data, you have already cleaned and prepped it and it is in its final format. An important part of importing is making sure you have completed the data validation steps. There are some exceptions, such as when we are testing the output.
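
Here is a minimal sketch of a validation check run before importing. The CSV content, column names, and rules (every required field filled in, corrosion_rate numeric) are hypothetical; real validation rules depend on the platform you are importing into.

```python
import csv
import io

# Hypothetical prepped data; in practice this would be read from a file with open()
prepped_csv = """pipe_id,material,corrosion_rate
P-001,carbon steel,0.10
P-002,stainless steel,0.02
P-003,,0.25
"""

REQUIRED = ("pipe_id", "material", "corrosion_rate")

def validate(rows):
    """Return a list of problems found; an empty list means the data passed."""
    problems = []
    for line_no, row in enumerate(rows, start=2):  # line 1 is the header
        for col in REQUIRED:
            if not (row.get(col) or "").strip():
                problems.append(f"line {line_no}: missing {col}")
        try:
            float(row["corrosion_rate"])
        except (TypeError, ValueError):
            problems.append(f"line {line_no}: corrosion_rate is not a number")
    return problems

rows = list(csv.DictReader(io.StringIO(prepped_csv)))
problems = validate(rows)
if problems:
    print("Fix before importing:", problems)
else:
    print(f"{len(rows)} rows ready to import")
```

Here the check catches the blank material on line 4, so the file goes back for cleaning instead of being imported with a gap.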

6) Modules- These are files of pre-written code that you load into languages such as Python (in "R", the equivalent is called a package). Some come bundled with the language, such as Python's built-in math and csv modules, while others, such as NumPy or Matplotlib, are installed separately. Modules perform routines for us so we don't have to hard code those routines ourselves.
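
A short sketch of the difference between a module that ships with Python and third-party modules. It uses the standard statistics module on placeholder numbers, then uses importlib.util.find_spec to check whether NumPy and Matplotlib happen to be installed on your machine (the result will vary from one computer to another).

```python
import importlib.util
import statistics  # part of Python's standard library; nothing to install

rates = [0.10, 0.02, 0.25, 0.15]  # placeholder values

# statistics.mean() saves us from hand-coding sum(rates) / len(rates)
mean_rate = statistics.mean(rates)
print("mean rate:", mean_rate)

# Third-party modules such as NumPy and Matplotlib must be installed separately
# (for example with pip). find_spec() returns None when a module is not installed.
for name in ("numpy", "matplotlib"):
    found = importlib.util.find_spec(name) is not None
    print(f"{name}: {'installed' if found else 'not installed'}")
```

If a module shows as not installed, an import statement for it would fail with a ModuleNotFoundError until you install it.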

7) Jupyter Notebook- Jupyter Notebook is an application that lets us write code, call modules, and run them in a user-friendly, browser-based environment. It makes it easy to view your code and run it at the same time: code is written in cells, each cell can be run on its own, and the output appears right below it. The Jupyter interface can also open a terminal. Notebooks can be saved to a folder and shared quite easily with friends and colleagues.

8) Data Modeling- "Data modeling is the process of creating a visual representation of either a whole information system or parts of it to communicate connections between data points and structures" (IBM, Data Modeling). There are three types of data models: 1) conceptual, 2) logical, and 3) physical. They become more detailed as you move from one to three. Review the IBM link in the sources for more detailed information.
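
To give a feel for the most detailed level, here is a minimal sketch of a physical model built with Python's standard sqlite3 module. At the conceptual level you might only say "pipes are made of materials"; the physical model below spells out tables, column types, keys, and the relationship. The table and column names are hypothetical.

```python
import sqlite3

# Physical model for a hypothetical piping dataset: two entities (material, pipe)
# and a one-to-many relationship (one material, many pipes).
SCHEMA = """
CREATE TABLE material (
    material_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL UNIQUE
);
CREATE TABLE pipe (
    pipe_id     TEXT PRIMARY KEY,
    pipe_class  TEXT NOT NULL,
    material_id INTEGER NOT NULL REFERENCES material(material_id)
);
"""

conn = sqlite3.connect(":memory:")        # throwaway in-memory database
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when enabled
conn.executescript(SCHEMA)

conn.execute("INSERT INTO material (name) VALUES ('carbon steel')")
conn.execute("INSERT INTO pipe VALUES ('P-001', 'A1', 1)")

# The relationship lets us join each pipe back to its material
row = conn.execute(
    "SELECT p.pipe_id, m.name FROM pipe p JOIN material m USING (material_id)"
).fetchone()
print(row)
```

Because the relationship is declared in the model, the database itself rejects a pipe that points at a material that doesn't exist.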

9) Patience/Attention to detail- I mention this because it is the most important skill you will need. You don't have to have it at the outset, but you have to be willing to develop it. There will be many times when you follow the steps, even copy and paste things, and still run into an issue. You need to be aware of how data gets changed at different steps, and how specific actions affect the outcome. This can be fun if you like to tinker with things to see how they affect the result.
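
Here is one small illustration of how a single step can quietly change data. The equipment tags below are hypothetical; converting them to numbers (which spreadsheets and import tools can also do when they decide a column is numeric) drops the leading zeros.

```python
# Hypothetical equipment tags stored as text, with leading zeros
tags = ["00123", "04567", "89000"]

# A "harmless" step: treating the tags as numbers
as_numbers = [int(t) for t in tags]
print(as_numbers)  # → [123, 4567, 89000]

# Converting back to text does not bring the zeros back...
back_to_text = [str(n) for n in as_numbers]
print(back_to_text == tags)  # → False

# ...unless you know the original width (5 here) and pad explicitly
restored = [str(n).zfill(5) for n in as_numbers]
print(restored == tags)  # → True
```

Nothing errors out at any step, which is exactly why this kind of change is easy to miss without checking the data after each step.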

These are just a few basic concepts I find important when it comes to working with data at a low level. Feel free to look some of these definitions up, and hit me up to let me know whether you think I should add something, or if you know a good resource. I would gladly add links to more resources here.

Sources:

1) Craig Stedman, "Data Cleansing," TechTarget.com (https://www.techtarget.com/searchdatamanagement/definition/data-scrubbing)

2) "Data Modeling," IBM.com (https://www.ibm.com/cloud/learn/data-modeling)